
Call for Prototype/Implementation Owners for Different GeoZarr Conformance Classes #63


|

Here are some other potential profiles that might be considered new items or could be folded into items in the list above:

|

|

I volunteer for SAR SLC and DEM. I believe it is important to define the ideal profile for the access patterns of various use cases: simple screening, terrain correction, interferometry using TOPSAR burst parsing, ... EDIT: for multispectral data, groups at different resolutions must also be addressed. |

|

I would add the GDAL multidimensional model, and at least be clear that "GDAL" (as above) usually means "2D classic raster" (setting aside the warper API and the geolocation frameworks). I don't think that's been considered. Edit: I wrote a bit about it here; it's not much, but I've only just got my teeth into it in recent weeks: https://www.hypertidy.org/posts/2025-03-12-r-py-multidim/r-py-multidim |

|

Oops, my apologies, I see @christophenoel did cover this; very glad to see it (awesome having this video and transcript!) |

|

@christophenoel could you share the slides of this presentation? |

The link to the slides has been shared in the public GeoZarr Google group at https://groups.google.com/u/0/g/geozarr/c/9NbEa84BBSA and is https://drive.google.com/file/d/1zoIhQK-J4fSM3dsRdWXXXW9v57GrhjTi/view?usp=sharing |

|

I'd love to assist with the 3D raster feature. |

|

Thanks for sharing the link. I'm interested in the first four items, with a preference for starting with the RGB case and a single-variable example. For info, I created the branch cnl-examples with an initial RGB raster profile example in both Zarr V2 and Zarr V3 formats. The examples are provided in a Jupyter Notebook intended for automatic launch via MyBinder. You can test them by creating the Jupyter environment simply by accessing the URL: binder |

|

Note: the V3 example was created using an old library. I will fix this. |

|

I'm up for RGB, single, and DEM, especially where they overlap with VRT or GTI (COP30, GEBCO, terrain RGB). How about XYZT? THREDDS servers via fileServer vs dodsC; there are a few good examples on NCI here. I don't know anything about hyperspectral 😀 |

|

While drafting the raster profiles and their examples, it became clear that some profiles, such as time-series-raster, serve best as complementary extensions to core 2D raster profiles (e.g. scalar-raster, rgb-raster). They add requirements for specific dimensions (e.g. time) but do not redefine the overall structure. To maintain interoperability and simplicity, the number of combinations must remain limited: excessive flexibility would increase complexity for applications and hinder standardisation efforts. 📦 These initial drafts are available in a dedicated branch, cnl-examples, along with working examples:

📓 You can explore them directly in a Jupyter environment using Binder: 👉 Launch examples notebook |

|

Thank you @christophenoel for these examples already! @emmanuelmathot, @maxrjones and I chatted yesterday about how to address this work and I want to make sure folks volunteering aren't diverging too much in expectations. Can I propose folks who have volunteered to find a time to meet next week and discuss goals of this exercise? |

|

Thank you, @christophenoel. In the meantime, could you turn the cnl-examples branch into a PR so that we can comment on your input? |

|

@emmanuelmathot I prefer to wait until the work is split into distinct tasks. This approach avoids dealing with a large PR that generates scattered discussions; I think a branch should be created for each profile. Additionally, the current branch contains only early drafts. Note that I am preparing examples for Zarr v3. The constraint concerning projected coordinates (projection_x_coordinate) seems overly restrictive and could be handled through an additional profile. |

|

I think |

|

I agree. But maybe rgb_raster can be a profile refining band_raster (which means: includes at least red, green, blue) |
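A minimal sketch of that refinement, assuming a dataset is summarized as a plain Python dict with a `band` coordinate listing band names. The function names `is_band_raster` and `is_rgb_raster` and the dict layout are hypothetical illustrations, not part of any GeoZarr draft:

```python
def is_band_raster(ds: dict) -> bool:
    """Hypothetical band_raster rule: a 'band' dimension plus 2D spatial dims."""
    dims = ds.get("dims", ())
    return "band" in dims and {"y", "x"} <= set(dims)


def is_rgb_raster(ds: dict) -> bool:
    """rgb_raster refines band_raster: every band_raster rule still applies,
    plus the band coordinate must include at least red, green and blue."""
    if not is_band_raster(ds):
        return False
    bands = set(ds.get("coords", {}).get("band", []))
    return {"red", "green", "blue"} <= bands


rgb = {"dims": ("band", "y", "x"), "coords": {"band": ["red", "green", "blue", "nir"]}}
grey = {"dims": ("band", "y", "x"), "coords": {"band": ["pan"]}}
print(is_rgb_raster(rgb), is_rgb_raster(grey), is_band_raster(grey))
# True False True
```

Because the refined check calls the base check first, anything that passes as rgb_raster is automatically a valid band_raster, while a single-band dataset stays a band_raster without being RGB.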

|

Separate the interpretation of sets of bands from their type. I don't think we have to model the ambiguity of Z/T from sets of types; it's a convention of sorts to model colour vs time vs depth vs any arbitrary coordinate space. TIFF can only specify grey, RGB, RGBA, or multiband of any number of -type-. I wonder if we're mixing GDAL heuristics with actual TIFF models. |

|

Hi @mdsumner, I'm not sure I understand what exactly you're replying to? |

|

Bear with me; I think I'm so used to humans detecting the interpretation that I can't even imagine a standard for that. |

|

To eliminate the ambiguity between data type and interpretation, the symbology extension (based on OGC symbology) proposed in the initial GeoZarr draft appears to offer a suitable solution. (Edit: however, a lot of GeoTIFFs would match this RGB profile, which allows detecting a possible mapping/export to GeoTIFF.) |

|

@christophenoel @emmanuelmathot @mdsumner In the CNG #geozarr slack channel I posed some times for next week to chat. |

|

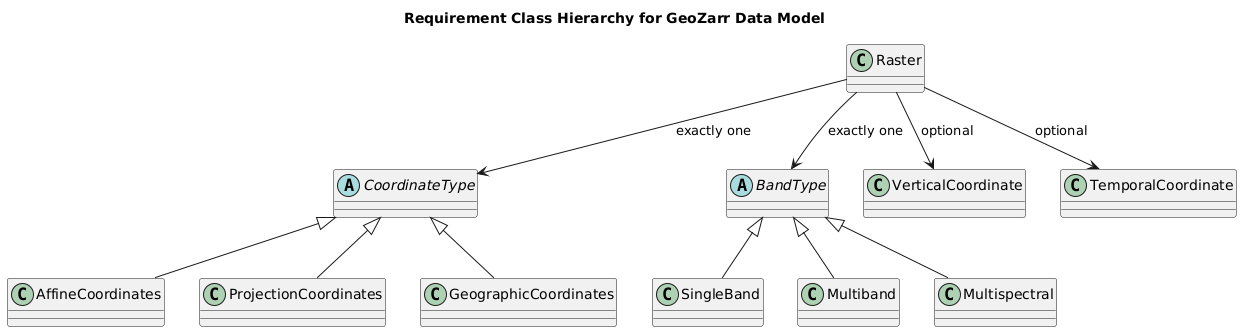

@christophenoel @emmanuelmathot @mdsumner @rabernat Defining a single profile to cover all kinds of rasters and datacubes is difficult. These datasets can include many different combinations (such as time, height, or wavelength) and can use either a projected or geographic coordinate system. In OGC, a profile is meant to tailor a standard for a specific use or community, not to describe every possible variation. From my point of view, a better approach is to use OGC conformance classes (see conformance classes). These are clear, testable building blocks. Each dataset can declare which classes it follows, like “has time”, “uses projected coordinates”, or “includes multiple bands”. This makes it easier to describe what a dataset contains and to check that it meets the expected rules. Instead of one big profile, each dataset is a combination of smaller, well-defined parts. |
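To make the idea concrete, here is a sketch in which each conformance class is an independent, testable predicate and a dataset declares the classes it claims; a validator then checks each declared class. The class names (`time`, `projected`, `multiband`), the `conformsTo` key and the dict-based dataset summary are illustrative assumptions, not names taken from any GeoZarr draft:

```python
from typing import Callable

# Hypothetical registry: each conformance class is a small, independently testable rule.
CLASSES: dict[str, Callable[[dict], bool]] = {
    "time": lambda ds: "time" in ds["dims"],
    "projected": lambda ds: any(
        attrs.get("standard_name") == "projection_x_coordinate"
        for attrs in ds["coord_attrs"].values()
    ),
    "multiband": lambda ds: "band" in ds["dims"],
}


def validate(ds: dict) -> dict[str, bool]:
    """Check every class the dataset declares in its 'conformsTo' list.
    An undeclared class name raises KeyError rather than silently passing."""
    return {name: CLASSES[name](ds) for name in ds["conformsTo"]}


ds = {
    "dims": ("time", "band", "y", "x"),
    "coord_attrs": {
        "x": {"standard_name": "projection_x_coordinate"},
        "y": {"standard_name": "projection_y_coordinate"},
    },
    "conformsTo": ["time", "projected", "multiband"],
}
print(validate(ds))
# {'time': True, 'projected': True, 'multiband': True}
```

Since each class is checked on its own, a dataset is described by the combination of classes it passes rather than by a single monolithic profile, and adding a new class does not change the existing ones.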

|

Note: regarding the "meta model" spec approach, see the PR: #64 |

|

Here's some text on the approach I proposed at the last meeting:

GeoZarr Composable Conformance Classes

Defining a single profile to cover all kinds of rasters and datacubes is difficult. These datasets can include many different combinations (such as time, height, or wavelength) and can use either a projected or geographic coordinate system.

The Four Dimensions of Profiles

GeoZarr datasets may be classified within a multi-dimensional space of options.

Examples

|

|

Thank you for kicking this off Ryan, this is a great foundation to build from! I am trying to think where sparse/ragged data would fall in the existing table. It looks like the CF conventions handle this: https://www.ncei.noaa.gov/netcdf-ragged-array-format, so perhaps there is another column of just 'n coord', where variable and 'n-coord' would fall under CF. Also trying to follow: pydata/xarray#7988 |
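For reference, the CF contiguous ragged array representation stores all observations back to back along one sample dimension and adds a count variable (which carries a `sample_dimension` attribute) giving the number of observations per feature. A minimal NumPy sketch of packing and unpacking, with made-up profile values, shows why this maps onto plain dense arrays:

```python
import numpy as np

# Three profiles of different lengths (illustrative values only).
profiles = [np.array([1.0, 2.0]), np.array([3.0]), np.array([4.0, 5.0, 6.0])]

# Pack: one flat 1D observation array plus a per-feature count array
# (the count array plays the role of the CF count variable).
obs = np.concatenate(profiles)
row_size = np.array([len(p) for p in profiles])

# Unpack: split the flat array at the cumulative counts.
offsets = np.cumsum(row_size)[:-1]
restored = np.split(obs, offsets)

print(obs.tolist(), row_size.tolist())
print([r.tolist() for r in restored])
```

Both `obs` and `row_size` are ordinary dense 1D arrays, so each could be stored as its own Zarr array; the ragged structure lives only in the metadata that links them.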

|

I like the idea of the "building block" options for constructing profiles, but I have some questions/comments on the current option descriptions. Regarding the "multi-band raster" type, many imaging satellite data products may not fit this definition, because the pixel spacing ("resolution") and/or dtype differs between bands. For example, Landsat 9 has bands with 15m, 30m, and 100m pixels, and the bands have different data types (INT16, UINT16, UINT8). Could the data variable type dimension option be expanded to accommodate this, or would this require a satellite data product to be composed of multiple GeoZarr multi-band rasters? Also, I'm not sure about the distinction between multi-band and hyper-spectral: multi-spectral satellite data products often have bands with specific wavelength ranges, and this information is important when trying to harmonize bands between different sensors (example: Landsat 9 and Sentinel-2). |

They currently don't. What I wrote above is focused on dense rasters. Could you clarify the specific use case you have in mind here (e.g. an example from an existing data product)?

This is a good point Tyler. Zarr can't treat arrays of different shape or dtype as part of the same array. (Related perhaps to Brianna's comment about ragged arrays.) In this case, the different bands of different resolution would have to be stored as distinct arrays. In the CF coordinate model, they would also need distinct dimension coordinates. (Not sure how the GDAL raster coordinate model handles that case; is the affine transform the same?) But in summary, yes, we would need to modify this categorization to allow for this scenario.
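A small NumPy illustration of the constraint described above: bands at different pixel spacings cannot be stacked into one array, so each resolution group becomes its own array with its own dimension coordinate. The tile size, origin, group names and the 30 m / 15 m split are hypothetical stand-ins loosely modelled on the Landsat example; only the x coordinate is shown for brevity:

```python
import numpy as np

# Hypothetical 3 km wide tile: a 30 m band (100x100) and a 15 m band (200x200).
b30 = np.zeros((100, 100), dtype=np.uint16)
b15 = np.zeros((200, 200), dtype=np.int16)

try:
    np.stack([b30, b15])  # fails: arrays of different shape cannot form one array
except ValueError as err:
    print("cannot stack:", err)

x0 = 500000.0  # illustrative left edge of the tile, in metres


def centres(origin: float, spacing: float, n: int) -> np.ndarray:
    """Pixel-centre coordinates for n pixels of the given spacing."""
    return origin + spacing * (np.arange(n) + 0.5)


# Separate arrays, each with its own dimension coordinate.
groups = {
    "r30m": {"data": b30, "x": centres(x0, 30.0, 100)},
    "r15m": {"data": b15, "x": centres(x0, 15.0, 200)},
}
for name, g in groups.items():
    print(name, g["data"].shape, g["data"].dtype, g["x"][0], g["x"][-1])
```

Both groups cover the same extent, but their coordinate arrays differ in length and spacing, which is why they need distinct dimension coordinates rather than a shared band dimension.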

I agree it's a fuzzy distinction. Is there an existing metadata convention that covers this somehow, e.g. in STAC? AFAIK CF does not. |

GDAL calls these subdatasets, and that case (bands that can't be stored in the same array) is exactly when a container format will present as subdatasets. Each one then has its own CRS and transform (these could be grouped together, but will or won't be depending on driver details, I think). For example, snipping out a few subdatasets from this file shows the range of array sizes (here unrolled as bands in GDAL classic mode for dims > yx); each "*_NAME=" here is a classic 2D raster with its own transform and CRS:

```
gdalinfo "ZARR:\"/vsizip//vsicurl/https://eopf-public.s3.sbg.perf.cloud.ovh.net/eoproducts/S02MSIL1C_20230629T063559_0000_A064_T3A5.zarr.zip\""
...
Subdatasets:
SUBDATASET_1_NAME=ZARR:"/vsizip//vsicurl/https://eopf-public.s3.sbg.perf.cloud.ovh.net/eoproducts/S02MSIL1C_20230629T063559_0000_A064_T3A5.zarr.zip":/conditions/geometry/mean_viewing_incidence_angles
SUBDATASET_1_DESC=[13x2] /conditions/geometry/mean_viewing_incidence_angles (Float64)
SUBDATASET_2_NAME=ZARR:"/vsizip//vsicurl/https://eopf-public.s3.sbg.perf.cloud.ovh.net/eoproducts/S02MSIL1C_20230629T063559_0000_A064_T3A5.zarr.zip":/conditions/geometry/sun_angles
SUBDATASET_2_DESC=[2x23x23] /conditions/geometry/sun_angles (Float64)
...
SUBDATASET_3_NAME=ZARR:"/vsizip//vsicurl/https://eopf-public.s3.sbg.perf.cloud.ovh.net/eoproducts/S02MSIL1C_20230629T063559_0000_A064_T3A5.zarr.zip":/conditions/geometry/viewing_incidence_angles
SUBDATASET_3_DESC=[13x4x2x23x23] /conditions/geometry/viewing_incidence_angles (Float64)
...
SUBDATASET_7_NAME=ZARR:"/vsizip//vsicurl/https://eopf-public.s3.sbg.perf.cloud.ovh.net/eoproducts/S02MSIL1C_20230629T063559_0000_A064_T3A5.zarr.zip":/conditions/mask/detector_footprint/r10m/b08
SUBDATASET_7_DESC=[10980x10980] /conditions/mask/detector_footprint/r10m/b08 (Byte)
SUBDATASET_8_NAME=ZARR:"/vsizip//vsicurl/https://eopf-public.s3.sbg.perf.cloud.ovh.net/eoproducts/S02MSIL1C_20230629T063559_0000_A064_T3A5.zarr.zip":/conditions/mask/detector_footprint/r20m/b05
SUBDATASET_8_DESC=[5490x5490] /conditions/mask/detector_footprint/r20m/b05 (Byte)
```

That's classic mode; in multidimensional mode it's a lot more like Zarr groups and arrays. |
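To tabulate the subdatasets that GDAL reports, a plain-text sketch like the following pulls the index, shape, path and data type out of the `SUBDATASET_n_DESC` lines. It assumes the `[AxBxC] /path (Type)` layout of the `gdalinfo` listing in this comment and works on a pasted excerpt rather than calling GDAL; `parse_subdatasets` is a hypothetical helper, not a GDAL function:

```python
import re

# Excerpt of the gdalinfo listing (DESC lines only).
GDALINFO = """\
SUBDATASET_1_DESC=[13x2] /conditions/geometry/mean_viewing_incidence_angles (Float64)
SUBDATASET_2_DESC=[2x23x23] /conditions/geometry/sun_angles (Float64)
SUBDATASET_7_DESC=[10980x10980] /conditions/mask/detector_footprint/r10m/b08 (Byte)
SUBDATASET_8_DESC=[5490x5490] /conditions/mask/detector_footprint/r20m/b05 (Byte)
"""

# Captures: subdataset index, "AxBxC" shape string, array path, GDAL data type.
DESC = re.compile(r"SUBDATASET_(\d+)_DESC=\[([\dx]+)\] (\S+) \((\w+)\)")


def parse_subdatasets(text: str) -> list[dict]:
    """Return index, shape, path and dtype for each SUBDATASET_n_DESC line."""
    out = []
    for m in DESC.finditer(text):
        idx, dims, path, dtype = m.groups()
        shape = tuple(int(d) for d in dims.split("x"))
        out.append({"index": int(idx), "shape": shape, "path": path, "dtype": dtype})
    return out


for sd in parse_subdatasets(GDALINFO):
    print(sd["index"], sd["shape"], sd["path"], sd["dtype"])
```

Laid out this way, the listing makes it easy to see which arrays share a shape (and could share coordinates) and which could not live in a single array.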

|

@rabernat The specific dataset I had in mind was OCO-2: https://disc.gsfc.nasa.gov/datasets/OCO2_L2_Lite_FP_11.2r/summary?keywords=oco2 Any sounding-type dataset or Level-2 product would be similar.

|

However, a few thoughts:

|

Great example of additional requirement classes, as this is a use case we have already faced when working with Zarr.

I would name them:

|

|

I added requirement-classes.ipynb to illustrate my current opinion about what the requirement/conformance classes should be. The approach structures the specification into complementary and exclusive building blocks, offering flexibility while ensuring interoperability across diverse Earth Observation (EO) and environmental data products. At the core, the raster requirement class establishes the baseline for CF-compliant geospatial rasters. From this foundation:

This structure provides a flexible and rigorous foundation upon which further specialised requirement classes can be defined. Extensions may include, but are not limited to:

The goal is to offer a scalable standardisation approach: simple datasets can conform to minimal classes, while complex, high-level products can be described through aggregation and extension of the core building blocks. |

|

Christoph, in your hierarchy, where does weather and climate model data fit in? Or Level 4 data with variables like wind speed, temperature, etc? I would not call these data "rasters" and I would not describe the data variables as "bands". Do you feel that is out of scope for GeoZarr? |

|

Where in that framework do CRS and non-degenerate coordinates fit? That, I think, is the more relevant question here. xarray and Zarr need to define the standards and elevate us from the legacy of CF; I don't see why that's not clear (?)

In Christoph's framework, I believe "non-degenerate coordinates" (Michael's terminology) would correspond to the "AffineCoordinates" or "ProjectionCoordinates" options. CRS is mandatory for all datasets.

Simply abandoning CF is not feasible, as it is a mandatory standard for many data providers (e.g. CMIP). I'm not sure if that's what you're suggesting. Our intention is to leverage CF conventions wherever appropriate (not reinvent the wheel) while providing some additional options beyond CF (e.g. the affine coordinates) where needed. |
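As a rough illustration of the difference between the two options, the sketch below expands a GDAL-style six-term geotransform `(x0, dx, rx, y0, ry, dy)` into explicit 1D pixel-centre coordinate arrays (the form an explicit projection-coordinate encoding would store) and then recovers the transform from those arrays. It assumes a north-up grid with zero rotation terms and uses made-up numbers; it is not the encoding either GeoZarr option actually specifies:

```python
import numpy as np


def transform_to_coords(gt, width, height):
    """Expand a north-up GDAL-style geotransform into 1D pixel-centre x/y arrays."""
    x0, dx, rx, y0, ry, dy = gt
    if rx != 0 or ry != 0:
        # Rotated grids need 2D coordinate arrays, not separate 1D x and y.
        raise ValueError("rotated grids cannot be written as separate 1D x/y coordinates")
    x = x0 + dx * (np.arange(width) + 0.5)
    y = y0 + dy * (np.arange(height) + 0.5)
    return x, y


def coords_to_transform(x, y):
    """Recover the geotransform from regularly spaced pixel-centre coordinates."""
    dx = x[1] - x[0]
    dy = y[1] - y[0]
    return (x[0] - dx / 2, dx, 0.0, y[0] - dy / 2, 0.0, dy)


gt = (600000.0, 10.0, 0.0, 5000040.0, 0.0, -10.0)  # illustrative 10 m grid
x, y = transform_to_coords(gt, width=4, height=3)
print(x, y)
print(coords_to_transform(x, y) == gt)
```

The affine form needs six numbers regardless of grid size, while the explicit form stores one value per row and column; the round trip only works because the grid is regular and unrotated.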

Maybe we should continue the discussion to get a better understanding of the above points, because I'm not sure we're aligned. |

@mdsumner I fully agree with @rabernat . Our customers and partners are supportive and keen to rely on CF wherever possible. As a compromise, the data model remains permissive (i.e. not strictly CF-compliant), but most of the requirement classes (which are optional) are expected to build upon CF conventions. |

|

@rabernat One final important point to move forward (apologies for the repeated messages): if there is a commonly used dataset or use case that does not fit within the initial set of requirement classes, we can then assess which additional classes would be appropriate and extend the class diagram accordingly. (?) |

|

I put the following in the agenda for today, but sadly won't be able to make the meeting, so I'm copying it here if anyone wants to discuss asynchronously. I observed at EGU that most geospatial use cases for Zarr currently are simple translations (or virtualizations) from existing well-defined standards, including OGC GeoTIFF/COG and the NetCDF CF conventions. I'd like to propose that we prioritize a GeoZarr v1.0 release that includes conformance classes matching existing CF and OGC standards exactly, with the only changes being those necessary to match the Zarr data structure and specification. I think this path would allow us to move much quicker and prompt adoption from those already producing geospatial Zarr, before next prioritizing features not directly supported by OGC GeoTIFF standards (i.e., n-dimensionality) or CF (i.e., functional coordinate representation). Here would be the steps for accomplishing this proposal:

This may be exactly what @christophenoel has already been proposing; admittedly, I've been trying hard to understand some of the discussions happening in this issue and how they would translate to a specification and implementations. |

|

A few clarifications may help ensure consistency with the ideas I shared:

The spec would provide requirement classes that allow data producers to advertise conformance to specific conventions (e.g. presence of lat/lon, CF compliance, multiscale support, affine transforms, time dimension, etc.). These classes are meant to be composable and declarative, rather than restrictive. Note: I’m not aiming to rush decisions on including or excluding topics from the specification. From my perspective, once the initial PR is reviewed and accepted, we should establish dedicated working groups for each topic (each with a dedicated PR) and observe which ones converge more quickly. The priority task seems to me to be properly defining the data model and its encoding into Zarr. The definition of requirement classes is secondary at this stage. |

Thanks for your clarifications, and apologies for any terseness in my comments; I just want to share thoughts quickly ahead of the meeting since I won't be there. I think we need two sources of input:

|


In the April 2nd monthly meeting, @christophenoel gave a great presentation explaining abstract data models, file formats, and encodings. He gave great context explaining how HDF, CF, and GDAL work, and proposed a meta-model as a bridge to zarr. More importantly he identified that what we have struggled with most in GeoZarr is trying to resolve issues that stem from diverging abstract geospatial data models.

In this proposed unified data model, there would be specific Profiles (labeled in this slide as Feature Types, but the group agrees to move forward with the terminology of Profiles):

The group that attended the call agreed with this characterization and approach. To move this conversation forward, we want to identify points of contact who will own a specific type of Profile that should work with GeoZarr. These owners would be responsible for prototyping specific encodings in GeoZarr and supporting full round-trip translation from the existing data model implementation to GeoZarr and back.

Below is the working list of Profiles that we need to identify owners for - please add additional Profiles to this list and suggestions of the best people to engage with:

Once we have an agreed upon list of Profiles and identified potential owners, I suggest this focus group to meet at a more frequent interval than monthly to coordinate. Of course open to any suggestions and feedback!
